| 8:00 | Registration |

| 8:20 | Opening and Welcome |

| 8:30 | General Auditory Processing Talks |

| 9:30 | Break (15 mins) |

| 9:45 | Music Perception and Production Talks |

| 11:00 | Poster Setup (10 mins) |

| 11:10 | Poster Session |

| 101-107 | General Auditory Processing |

| 108-114 | Multisensory Processing |

| 115-119 | Auditory Pathology |

| 120-123 | Auditory Development |

| 124-130 | Musical Features |

| 131-134 | Music Performance |

| 135-145 | Speech and Language |

| 146-151 | Audition and Emotion |

| 12:40 | Lunch Break (50 minutes) |

| 1:30 | Keynote Address: Isabelle Peretz (University of Montréal) |

| 2:00 | Speech Perception Talks |

| 3:00 | Break (15 minutes) |

| 3:15 | Auditory Perception Across the Lifespan Talks |

| 4:15-5:00 | Business Meeting (APCAM, APCS, and AP&C) |

Welcome to the 18th annual Auditory Perception, Cognition, and Action Meeting (APCAM 2019) at the Palais des Congrès de Montréal (Montréal Convention Centre) in Montréal! Since its founding in 2001-2002, APCAM's mission has been "...to bring together researchers from various theoretical perspectives to present focused research on auditory cognition, perception, and aurally guided action." We believe APCAM to be a unique meeting in containing a mixture of basic and applied research from different theoretical perspectives and processing accounts using numerous types of auditory stimuli (including speech, music, and environmental noises). As noted in previous programs, the fact that APCAM continues to flourish is a testament to the openness of its attendees to considering multiple perspectives, which is a principal characteristic of scientific progress.

As announced at APCAM 2017 in Vancouver, British Columbia, APCAM is now affiliated with the journal Auditory Perception and Cognition (AP&C), which features both traditional and open-access publication options. Presentations at APCAM will be automatically considered for a special issue of AP&C, and in addition, we encourage you to submit your other work on auditory science to AP&C. Feel free to stop at the Taylor & Francis exhibitor booth at the Psychonomic Society meeting for additional information or to see a sample copy.

Also announced at the Vancouver meeting was APCAM's affiliation with the Auditory Perception and Cognition Society (APCS) (https://apcsociety.org). This non-profit foundation is charged with furthering research on all aspects of audition. The $30 registration fee for APCAM provides a one-year membership for APCS, which includes an individual subscription to AP&C and reduced open-access fees for AP&C.

As an affiliate meeting of the 60th Annual Meeting of the Psychonomic Society, APCAM is indebted to the Psychonomic Society for material support regarding the meeting room, AV equipment, and poster displays. We acknowledge and are grateful for their support, and we ask that you pass along your appreciation to the Psychonomic Society for their support of APCAM.

We appreciate all of our colleagues who contributed to this year's program. We thank you for choosing to share your work with us, and we hope you will continue to contribute to APCAM in the future. This year's program features spoken sessions on speech perception, music perception and production, auditory perception across the lifespan, and general auditory processing, as well as a wide variety of poster topics. We are confident that everyone attending APCAM will find something interesting, relevant, and thought-provoking.

If there are issues that arise during the meeting, or if you have thoughts for enhancing future meetings, do not hesitate to contact any committee member. We wish you a pleasant and productive day at APCAM!

Sincerely,

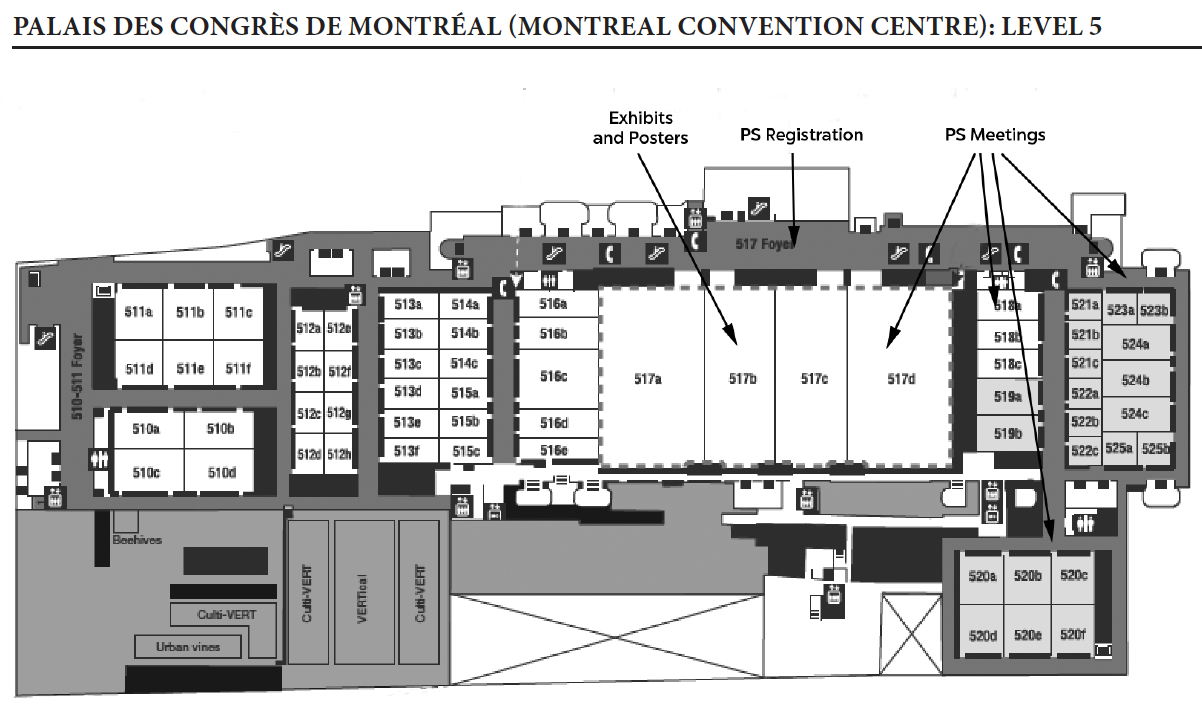

The spoken sessions and business meeting will take place on the fifth floor of the convention center in Room 519A.

The poster session will take place down the hall in Room 517B. Posters for APCAM will begin with board #101 and continue through #151.

Presentation titles are clickable links to the associated abstract.

| 8:00 | Registration | |

| 8:20 | Opening and Welcome | |

| General Auditory Processing | ||

| 8:30 | The Jingle and Jangle of Listening Effort | Julia Strand (Carleton College) |

| 8:45 | The predictive brain: Signal informativeness modulates human auditory cortical responses | Amour Simal (University of Montréal) Patrick Bermudez (University of Montréal) Christine Lefebvre (University of Montréal) François Vachon (Laval University) Pierre Jolicoeur (University of Montréal) |

| 9:00 | Incidental Auditory Learning and Memory-guided Attention: A Behavioural and Electroencephalogram (EEG) Study | Manda Fischer (University of Toronto) Morris Moscovitch (University of Toronto) Claude Alain (University of Toronto) |

| 9:15 | Acoustic features of environmental sounds that convey actions | Laurie Heller (Carnegie Mellon University) Asadali Sheikh (Carnegie Mellon University) |

| 9:30 | Morning Break (15 minutes) | |

| Music Perception and Production | ||

| 9:45 | Sung improvisation as a new tool for revealing implicit tonal knowledge in congenital amusia | Michael W. Weiss (University of Montréal) Isabelle Peretz (University of Montréal) |

| 10:00 | Physiological markers of individual differences in musicians' performance rates | Shannon Eilyce Wright (McGill University) Caroline Palmer (McGill University) |

| 10:15 | A Meta-analysis of Timbre Spaces Comparing Attack Time and the Temporal Centroid of the Attack | Savvas Kazazis (McGill University) Philippe Depalle (McGill University) Stephen McAdams (McGill University) |

| 10:30 | On Computationally Modelling the Perception of Orchestral Effects using Symbolic Score Information | Aurelien Antoine (McGill University) Philippe Depalle (McGill University) Stephen McAdams (McGill University) |

| 10:45 | Music & Consciousness: Shifting Representations in Memory for Melodies | W. Jay Dowling (University of Texas at Dallas) |

| 11:00 | Poster Setup | |

| 11:10 | Poster Session | |

| General Auditory Processing (101-107) | ||

| 101 | Using survival analysis to examine the impact of auditory distraction on the time-course of insight versus analytic solutions in problem solving | John E. Marsh (University of Central Lancashire) Emma Threadgold (University of Central Lancashire) Melissa E. Barker (University of Central Lancashire) Damien Litchfield (Edge Hill University) Linden J. Ball (University of Central Lancashire) |

| 102 | Confidence, learning, and metacognitive accuracy track auditory ERP dynamics | Alexandria Zakrzewski (Kansas State University) Natalie Ball (University at Buffalo) Destiny Bell (Kansas State University) Kelsey Wheeler (Kansas State University) Matthew Wisniewski (Kansas State University) |

| 103 | Impacts of pre-stimulus brain states on auditory performance | Matthew Wisniewski (Kansas State University) |

| 104 | Where are my ears? Impact of the point of observation on the perceived direction of a moving sound source | Mike Russell (Washburn University) Bryce Strickland (Washburn University) Gabrielle Kentch (Washburn University) |

| 105 | A tree falls in a forest. Does it fall hard or soft? Perception of contact type and event title on observer judgments | Carli Herl (Washburn University) Mike Russell (Washburn University) |

| 106 | Does auditory looming bias exist? Influence of participant position and response method on accuracy of arrival judgments | Gabrielle Kentch (Washburn University) Mike Russell (Washburn University) |

| 107 | Influence of Recording Device Position on Judgments of Pedestrian Direction Across Two Dimensions | Bryce Strickland (Washburn University) Mike Russell (Washburn University) |

| Multisensory Processing (108-114) | ||

| 108 | The Pupillary Dilation Response to Auditory Deviations: A Matter of Acoustical Novelty? | Alessandro Pozzi (Laval University) Alexandre Marois (Laval University) Johnathan Crépeau (Laval University) Lysandre Provost (Laval University) François Vachon (Laval University) |

| 109 | Is Auditory Working Memory More Demanding than Visual? | Joseph Rovetti (Ryerson University) Huiwen Goy (Ryerson University) Frank Russo (Ryerson University) |

| 110 | Influence of ambiguous pitch-class information on sound-color synesthesia | Lisa Tobayama (The University of Tokyo) Sayaka Harashima (The University of Tokyo) Kazuhiko Yokosawa (The University of Tokyo) |

| 111 | Plasticity in color associations evoked by musical notes in a musician with synesthesia and absolute pitch | Cathy Lebeau (Université du Québec à Montréal) François Richer (Université du Québec à Montréal) |

| 112 | The influence of timbre of musical instruments and ensembles on an associative color-sound perception | Elena Nikolaevna Anisimova (Waldorf School Backnang) |

| 113 | External validation of the Battery for the Assessment of Sensorimotor Auditory and Timing Abilities (BAASTA) with the Montreal-Beat Alignment Test (M-BAT) for the assessment of synchronization to music | Ming Ruo Zhang (University of Montréal) Véronique Martel (University of Montréal) Isabelle Peretz (University of Montréal) Simone Dalla Bella (University of Montréal) |

| 114 | Tablet version of the Battery for the Assessment of Auditory Sensorimotor and Timing Abilities (BAASTA) | Hugo Laflamme (University of Montréal) Mélody Blais (University of Montréal) Naeem Komeilipoor (University of Montréal) Camille Gaillard (University of Montréal) Melissa Kadi (University of Montréal) Agnès Zagala (University of Montréal) Simon Rigoulot (University of Montréal) Sonja Kotz (University of Maastricht & Max Planck Institute for Human Cognitive and Brain Sciences) Simone Dalla Bella (University of Montréal) |

| Auditory Pathology (115-119) | ||

| 115 | Effects of Auditory Distraction on Cognition and Eye Movements in Adults with Mild Traumatic Brain Injury | Ileana Ratiu (Midwestern University) Miyka Whiting (Midwestern University) |

| 116 | Head Injuries and the Hearing Screening Inventory | Chris Koch (George Fox University) Abigail Anderson (George Fox University) |

| 117 | Can rhythmic abilities distinguish neurodevelopmental disorders? | Camille Gaillard (University of Montréal) Mélody Blais (University of Montréal) Frédéric Puyjarinet (University of Montpellier) Valentin Bégel (McGill University) Régis Lopez (National Reference Center for Narcolepsy and Idiopathic Hypersomnia) Madeline Vanderbergue (University of Lille) Marie-Pierre Lemaître (University Hospital of Lille) Quentin Devignes (University of Lille) Delphine Dellacherie (University of Lille) Simone Dalla Bella (University of Montréal) |

| 118 | A Case Study in Unilateral Pure Word Deafness | Colin Noe (Rice University) Simon Fischer-Baum (Rice University) |

| 119 | Auditory Attentional Modulation in Hearing Impaired Listeners | Jason Geller (University of Iowa) Inyong Choi (University of Iowa) |

| Development (120-123) | ||

| 120 | Bilingual and monolingual toddlers can detect mispronunciations, regardless of cognate status | Esther Schott (Concordia University) Krista Byers-Heinlein (Concordia University) |

| 121 | Does the structure of the lexicon change with age? An investigation using the Auditory Lexical Decision Task | Kylie Alberts (Union College) Brianne Noud (Washington University in St. Louis) Jonathan Peelle (Washington University in St. Louis) Chad Rogers (Union College) |

| 122 | The temporal dynamics of intervention for a child with delayed language | Schea Fissel (Midwestern University, Glendale) Ida Mohebpour (Midwestern University, Glendale) Ashley Nye (Midwestern University, Glendale) |

| 123 | Investigating the melodic pitch memory of children with autism spectrum disorder | Samantha Wong (McGill University) Sandy Stanutz (McGill University) Shalini Sivathasan (McGill University) Emily Stubbert (McGill University) Jacob Burack (McGill University) Eve-Marie Quintin (McGill University) |

| Musical Features (124-130) | ||

| 124 | Bowed plates and blown strings: Odd combinations of excitation methods and resonance structures impact perception | Erica Huynh (McGill University) Joël Bensoam (IRCAM) Stephen McAdams (McGill University) |

| 125 | Evaluation of Musical Instrument Timbre Preference as a Function of Materials | Jacob Colville (James Madison University) Michael Hall (James Madison University) |

| 126 | The Spatial Representation of Pitch in Relation to Musical Training | Asha Beck (James Madison University) Michael Hall (James Madison University) Kaitlyn Bridgeforth (James Madison University) |

| 127 | The Pythagorean Comma and the Preference for Stretched Octaves | Timothy L. Hubbard (Arizona State University and Grand Canyon University) |

| 128 | The Root of Harmonic Expectancy: A Priming Study | Trenton Johanis (University of Toronto) Mark Schmuckler (University of Toronto) |

| 129 | WITHDRAWN—Perception of Beats and Temporal Characteristics of the Auditory Image | Sharathchandra Ramakrishnan (University of Texas at Dallas) |

| 130 | Knowledge of Western popular music by Canadian- and Chinese-born university students: the extent of common ground | Annabel Cohen (University of Prince Edward Island) Corey Collett (University of Prince Edward Island) Jingyuan Sun (University of Prince Edward Island) |

| Music Performance (131-134) | ||

| 131 | Generalization of Novel Sensorimotor Associations among Pianists and Non-pianists | Chihiro Honda (University at Buffalo, SUNY) Karen Chow (University at Buffalo, SUNY) Emma Greenspon (Monmouth University) Peter Pfordresher (University at Buffalo, SUNY) |

| 132 | WITHDRAWN—Singing training improves pitch accuracy and vocal quality in novice singers | Dawn Merrett (The University of Melbourne) Sarah Wilson (The University of Melbourne) |

| 133 | Musical prodigies practice more during a critical developmental period | Chanel Marion-St-Onge (University of Montréal) Megha Sharda (University of Montréal) Michael W. Weiss (University of Montréal) Margot Charignon (University of Montréal) Isabelle Peretz (University of Montréal) |

| 134 | A case study of prodigious musical memory | Margot Charignon (University of Montréal) Chanel Marion-St-Onge (University of Montréal) Michael Weiss (University of Montréal) Isabelle Héroux (Université du Québec à Montréal) Megha Sharda (University of Montréal) Isabelle Peretz (University of Montréal) |

| Speech and Language (135-145) | ||

| 135 | What Accounts for Individual Differences in Susceptibility to the McGurk Effect? | Lucia Ray (Carleton College) Naseem Dillman-Hasso (Carleton College) Violet Brown (Washington University in St. Louis) Maryam Hedayati (Carleton College) Annie Zanger (Carleton College) Sasha Mayn (Carleton College) Julia Strand (Carleton College) |

| 136 | Fricative/affricate perception measured using auditory brainstem responses | Michael Ryan Henderson (Villanova University) Joseph Toscano (Villanova University) |

| 137 | Vowel-pitch interactions in the perception of pitch interval size | Frank Russo (Ryerson University) Dominique Vuvan (Skidmore College) |

| 138 | Interpretation of pitch patterns in spoken and sung speech | Shannon Heald (University of Chicago) Stephen Van Hedger (University of Western Ontario) Howard Nusbaum (University of Chicago) |

| 139 | How binding words with a musical frame improves their recognition | Agnès Zagala (University of Montréal) Séverine Samson (University of Lille) |

| 140 | Exploring Linguistic Rhythm in Light of Poetry: Durational Priming of Metrical Speech in Turkish | Züheyra Tokaç (Bogazici University) Esra Mungan (Bogazici University) |

| 141 | The effects of item and talker variability on the perceptual learning of time-compressed speech following short-term exposure | Karen Banai (University of Haifa) Hanin Karawani (University of Haifa) Yizhar Lavner (Tel-Hai College) Limor Lavie (University of Haifa) |

| 142 | The effect of interlocutor voice context on bilingual lexical decision | Monika Molnar (University of Toronto) Kai Leung (University of Toronto) |

| 143 | Manual directional gestures facilitate speech learning | Anna Zhen (New York University Shanghai) Stephen Van Hedger (University of Chicago) Shannon Heald (University of Chicago) Susan Goldin-Meadow (University of Chicago) Xing Tian (New York University Shanghai) |

| 144 | Social Judgments about Speaker Confidence and Trust: A Computer Mouse-Tracking Paradigm | Jennifer Roche (Kent State University) Shae D. Morgan (University of Louisville) Erica Glynn (Kent State University) |

| 145 | Women are Better than Men in Detecting Vocal Sex Ratios | John Neuhoff (The College of Wooster) |

| Audition and Emotion (146-151) | ||

| 146 | Impact of Affective Vocal Tone on Social Judgments of Friendliness and Political Ideology | Erica Glynn (Kent State University) Thimberley Morgan (Kent State University) Rachel Whitten (Kent State University) Madison Knodell (Kent State University) Jennifer Roche (Kent State University) |

| 147 | Emotive Attributes of Complaining Speech | Maël Mauchand (McGill University) Marc Pell (McGill University) |

| 148 | Effects of vocally-expressed emotions on visual scanning of faces: Evidence from Mandarin Chinese | Shuyi Zhang (McGill University) Pan Liu (McGill University; Western University) Simon Rigoulot (McGill University; Université du Québec à Trois-Rivières) Xiaoming Jiang (McGill University; Tongji University) Marc Pell (McGill University) |

| 149 | WITHDRAWN—Perception of emotion in West African indigenous music | Cecilia Durojaye (Max Planck Institute for Empirical Aesthetics) |

| 150 | Timbral, temporal, and expressive shaping of musical material and their respective roles in musical communication of affect | Kit Soden (McGill University) Jordana Saks (McGill University) Stephen McAdams (McGill University) |

| 151 | Emotional responses to acoustic features: Comparison of three sounds produced by Ram’s horn (Shofar) | Leah Fostick (Ariel University) Maayan Cytrin (Ariel University) Eshkar Yadgar (Ariel University) Howard Moskowitz (Mind Genomics Associates, Inc.) Harvey Babkoff (Bar-Ilan University) |

| 12:40 | Lunch Break (50 minutes) | |

| Keynote Address | ||

| 1:30 | The extraordinary variations of the musical brain | Isabelle Peretz (University of Montréal) |

| Speech Perception and Production | ||

| 2:00 | Working Memory Is Associated with the Use of Lip Movements and Sentence Context During Speech Perception in Noise in Younger and Older Bilinguals | Alexandre Chauvin (Concordia University) Natalie Phillips (Concordia University) |

| 2:15 | Inhibition of articulation muscles during listening and reading: A matter of modality of input and intention to speak out loud | Naama Zur (University of Haifa) Zohar Eviatar (University of Haifa) Avi Karni (University of Haifa) |

| 2:30 | Cup! Cup? Cup: Comprehension of Intentional Prosody in Adults and Children | Melissa Jungers (The Ohio State University) Julie Hupp (The Ohio State University) Celeste Hinerman (The Ohio State University) |

| 2:45 | Interrelations in Emotion and Speech Physiology Lead to a Sound Symbolic Case | Shin-Phing Yu (Arizona State University) Michael McBeath (Arizona State University) Arthur Glenberg (Arizona State University) |

| 3:00 | Afternoon Break (15 minutes) | |

| Multisensory and Developmental | ||

| 3:15 | Affective interactions: Comparing auditory and visual components in dramatic scenes | Kit Soden (McGill University) Moe Touizrar (McGill University) Sarah Gates (Northwestern University) Bennett K. Smith (McGill University) Stephen McAdams (McGill University) |

| 3:30 | Auditory and Somatosensory Interaction in Speech Perception in Children and Adults | Paméla Trudeau-Fisette (Université du Québec à Montréal) Camille Vidou (Université du Québec à Montréal) Takayuki Ito (Université du Québec à Montréal) Lucie Ménard (Université du Québec à Montréal) |

| 3:45 | Rhythmic determinants of developmental dyslexia | Valentin Begel (Lille University) Simone Dalla Bella (University of Montréal) Quentin Devignes (Lille University) Madeline Vanderbergue (Lille University) Marie-Pierre Lemaitre (University Hospital of Lille) Delphine Dellacherie (Lille University) |

| 4:00 | The effectiveness of musical interventions on cognition in children with autism spectrum disorder: A systematic review and meta-analysis | Kevin Jamey (University of Montréal) Nathalie Roth (University of Montréal) Nicholas E. V. Foster (University of Montréal) Krista L. Hyde (University of Montréal) |

| 4:15-5:00 | Business Meeting (APCAM, APCS, and AP&C) | |

There is increasing concern that findings in the psychological literature may not be as robust or replicable as previously thought. The "replication crisis" that has struck psychology and other sciences is likely to be the result of a host of factors including questionable research practices, the incentive structure of science, and the file-drawer problem (see Nelson et al. 2018 for a recent review). Another factor that may contribute to inconsistencies in the literature is variability in measurement (Flake & Fried 2019). Indeed, researchers may choose to operationalize constructs that cannot be directly measured (e.g., "musical skill," "happiness," "working memory") in a host of ways. This inconsistency in measurement has the potential to lead to what has been referred to as the jingle fallacy (falsely assuming two tasks or scales measure the same underlying construct because they have the same name) or the jangle fallacy (falsely assuming two tasks or scales measure something different because they have different names; see Block 2000; Flake & Fried 2019; Thorndike 1904). Here, we present recent research from our lab on the construct of Listening Effort—"the deliberate allocation of mental resources to overcome obstacles in goal pursuit when carrying out a [listening] task" (Pichora-Fuller et al., 2016). This work demonstrates that multiple listening effort tasks that are commonly used in the literature and assumed to measure the same underlying construct are in fact only very weakly related to one another (demonstrating "jingle"). In addition, we show that measures that are intended to quantify listening effort may instead be tapping into more domain-general, cognitive abilities like working memory (demonstrating "jangle"). We also offer concrete suggestions for other researchers to evaluate the measurement of the constructs they study to help them avoid jingle and jangle in their own work.

Rapid, pre-attentive prediction, coding of prediction error, and disambiguation of stimuli seem to be of particular importance in the auditory domain. We aimed to observe how information is carried in a tone sequence, and how it modulates cortical responses. To do this, we used a standard short-term memory (STM) task. Participants heard two sequences of 1, 3, or 5 tones (200 ms on, 200 ms off) interspersed by a silent interval (2 s). They decided whether the two sequences were the same or different. In a first experiment, the length of the tone sequences was randomized between trials. During the first sequence, the amplitude of the auditory P2 was larger for the second tone in trials with 3 tones, and for the second and fourth tones in trials with 5 tones. We hypothesize the increase in P2 reflected a dynamic disambiguation process because these tones were predictive of a sequence longer than 1 or 3 tones. This hypothesis was supported by the absence of P2 amplitude modulation during the second sequence (when sequence length was already known). In a second experiment, we blocked trials by sequence length to ensure the effects were not caused by some process related to encoding in STM. There was no P2 amplitude modulation in either the first or second sequences. Thus, tones 2 and 4 had a larger amplitude only when they provided new information about the length of the current tone sequence. To some extent, the auditory N1 also showed those modulations. These results suggest a rapid dynamic adaptation of auditory cortical responses related to contextually-determined disambiguating information on a very short timescale.

Can implicit (non-conscious) associations facilitate auditory target detection? Participants were presented with 80 different audio-clips of familiar sounds, in which half of the clips included a lateralized pure tone. Participants were only told to classify audio-clips as natural (e.g., waterfall) or manmade (e.g., airplane engine). After a delay, participants took a surprise memory test in which they were presented with old and new audio-clips and asked to press a button to detect a lateralized faint pure tone (target) embedded in each audio-clip. On each trial, they also indicated if the clip was (i) old or new; (ii) recollected or familiar; and (iii) if the tone was on the left, right, or not present when they heard the audio-clip prior to the test. The results show good explicit memory for the clip, but not for the tone location or tone presence. Target detection was also faster for old clips than for new clips but did not vary as a function of the association between spatial location and audio-clip. Neuro-electric activity at test, however, revealed an old-new effect at midline and frontal sites as well as a significant difference between clips that had been associated with the location of the target compared to those that were not associated with it. These results suggest that implicit associations were formed that facilitate target processing irrespective of location. The implications of these findings in the context of memory-guided attention are discussed.

How do humans use acoustic information to understand what events are occurring in their environment? Although there are countless environmental sounds, there exist a manageable number of types of physical interactions that produce sounds, such as impacts, scrapes and splashes (e.g. Gaver, 1993). To address how listeners may detect and utilize the classes of causal interactions that produce sounds, listeners classified a wide variety of sound events according to their actions in two experiments. In the first experiment, listeners judged a variety of small-scale, human-generated events made with solids, liquids, and/or air (Heller and Skerritt, APCAM, Boston, MA, 2009; the Sound Events Database, http://www.auditorylab.org). In the second experiment, listeners judged a wider variety of human-, animal- and machine-generated events (ESC-50 Environmental Sound Database, Piczak, Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 1015-1018, 2015. DOI 10.1145/2733373.2806390.) In both experiments, listeners were asked to assess the causal likelihood of the sounds being created by various action-related verbs. Cluster analyses reveal how the sounds were organized with respect to the actions they convey to listeners. Acoustic analysis of the sounds incorporated spectral features (e.g. harmonic partials), temporal features (e.g. modulation rate), and spectro-temporal features (e.g. frequency variation of spectral peaks across repeated transients). First, an effort was made to connect these acoustic features to physical causal processes that differentiated classes of sounds. Next, discriminant analyses showed which actions were most important in classifying and discriminating the sounds. Finally, a small set of acoustic variables was able to differentiate the behaviorally-derived sound groups, suggesting the possibility of a small set of higher-order auditory features that typify each causal sound-producing event.

Individuals with congenital amusia have notorious difficulties detecting out-of-key notes. A recent model of amusia proposes that this deficit is caused by disruption of conscious access to tonal knowledge, rather than absence of tonal knowledge (Peretz, 2016, TiCS). Interestingly, some amusics sing well-known songs in tune. In one case, an amusic improvised melodies that were mostly in-key, although this was not the intended task (Peretz, 1993, Cog. Neuropsych.). The case described by Peretz (1993) is noteworthy because, by definition, an improvisation does not rely on a melodic template, and any observed tonality cannot be attributed to rehearsal or feedback from others. In the current study, we examined improvisations by asking a group of amusics to sing novel melodies in response to verbal prompts or in continuation of melodic stems (n=28 improvisations; M=44.1±33.8 notes/improvisation). Data collection is ongoing, but includes 14 amusics and 8 nonmusician controls. In order to assess the extent to which each improvisation reflects internal representation of key membership, we calculated the proportion of in-key notes (PIK) in the nearest key (Krumhansl-Schmuckler), which was z-transformed using a randomly-generated null distribution (zPIK). For each participant, we calculated the proportion of improvisations that respected a given key better than chance (i.e., zPIK>0). The proportion was above chance (i.e., proportion of 0.5) for 12 of 14 amusics (M=0.62±0.15) and 7 of 8 controls (M=0.80±0.21). On a group level, the average zPIK score was greater than chance (i.e., zPIK of 0) for both amusics (M=0.61±0.95) and controls (M=1.90±1.50), and controls had a higher average zPIK score than amusics. The results confirm that amusics have acquired tonal knowledge without awareness but that this knowledge may be partial compared to typical nonmusicians. This fulfills a key prediction of Peretz (2016) and demonstrates the utility of sung improvisation as a method to study implicit musical knowledge.
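
The zPIK measure described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation and rests on several assumptions that the abstract does not specify: the "nearest key" is approximated by the major key that maximizes the proportion of in-key notes (a simple stand-in for Krumhansl-Schmuckler key finding), and the null distribution is built from uniformly random pitch classes of the same length as the improvisation. The names `pik`, `best_pik`, and `zpik` are hypothetical.

```python
import random
import statistics

# Pitch classes of a major scale relative to its tonic (assumption: major keys only).
MAJOR_SCALE = {0, 2, 4, 5, 7, 9, 11}


def pik(pitches, tonic):
    """Proportion of notes whose pitch class belongs to the major key on `tonic`."""
    in_key = sum(((p - tonic) % 12) in MAJOR_SCALE for p in pitches)
    return in_key / len(pitches)


def best_pik(pitches):
    """Highest PIK over the 12 major keys.

    Assumption: this replaces Krumhansl-Schmuckler key finding with a
    simpler "best-fitting key" rule for illustration only.
    """
    return max(pik(pitches, tonic) for tonic in range(12))


def zpik(pitches, n_null=2000, seed=0):
    """z-score of the observed PIK against random melodies of equal length.

    Assumption: null melodies draw each pitch class uniformly at random.
    """
    rng = random.Random(seed)
    observed = best_pik(pitches)
    null = [best_pik([rng.randrange(12) for _ in pitches]) for _ in range(n_null)]
    mu = statistics.mean(null)
    sd = statistics.pstdev(null)
    if sd == 0:
        return 0.0  # degenerate null (e.g., a one-note melody)
    return (observed - mu) / sd


if __name__ == "__main__":
    # MIDI note numbers for a melody that stays entirely within C major.
    melody = [60, 62, 64, 65, 67, 69, 71, 72, 71, 69, 67, 65, 64, 62, 60, 67]
    print(zpik(melody) > 0)  # a fully in-key melody scores above chance
```

Under these assumptions, a melody scores zPIK > 0 when it fits its best key better than random melodies of the same length typically do, which mirrors the chance criterion used in the abstract.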

Spontaneous motor production rates are observed when individuals perform music. Individual differences in these spontaneous rates exist, yet factors that contribute to these individual differences remain largely unidentified. Biological oscillations such as circadian and cardiac rhythms can be influenced by music performance tasks. This study investigates whether physiological markers can explain individual differences in musicians' spontaneous production rates. Twenty-four trained pianists performed melodies at four testing sessions in a single day (09h, 13h, 17h, 21h). At each testing session, 5-minute baseline measurements of heart rate (RR or beat-to-beat intervals) were recorded; heart rate was also measured during piano performance. Pianists performed a familiar melody and an unfamiliar melody at regular, spontaneous (unpaced) rates at each testing session. Chronotype measures (morning or evening type) were collected at the final session. Spontaneous performance rates were slowest at 09h in the sample of primarily evening chronotypes. Temporal variability did not change significantly across the testing times. Performance rates within individuals were largely stable across testing sessions, with faster performers remaining faster across the day and slower performers remaining slower across the day. Heart rate was faster and heart rate variability was reduced during music performances relative to baseline measurements. Autorecurrence quantification analysis on the RR heart beat intervals indicated more deterministic patterns in cardiac fluctuations during music performance than during baseline. Moreover, individual pianists' timing variability in performances of the unfamiliar piece correlated with greater determinism in cardiac rhythms. These results suggest that performance rates are stable across the day and that dynamic patterns in cardiac rhythms may differentiate individuals' performances based on their temporal features.

Attack time (or rise time) is considered to be one of the most important temporal audio features for explaining dissimilarity ratings between pairs of sounds. In several timbre studies, it has been shown to correlate strongly with one perceptual dimension revealed by multidimensional scaling analysis (MDS) on the dissimilarity ratings. An ordinal scaling experiment was conducted to test whether listeners are able to rank order harmonic stimuli with varying attack times and temporal centroids of the attack (i.e., the center of gravity of the amplitude envelope of a signal during the attack portion). The attack times had a range of 40 to 500 ms, but the decay times were kept constant. Each stimulus set of attack temporal centroids was constructed with fixed attack (range = 40-500 ms) and decay times, but they varied in the shape of the amplitude envelope during the attack portion. Although there were many confusions in ordering stimuli with short attack times, in most cases listeners could correctly order stimuli with varying temporal centroids even at very short attack times. These results led us to conduct a meta-analysis of six timbre spaces (Grey, 1977; Grey & Gordon, 1978; Iverson & Krumhansl, 1993; McAdams et al., 1995; Lakatos, 2000) in which we compared the explanatory power of attack time with the temporal centroid of the attack. The analysis showed that the attack temporal centroid correlated with a given MDS dimension as strongly as or more strongly than attack time itself, and that in some cases the differences between the two correlation coefficients were significant (p < 0.05). With respect to the results of the ordinal scaling experiment, the meta-analysis of timbre spaces indicates that the attack temporal centroid is a robust audio feature for explaining dissimilarity ratings along a temporal perceptual dimension.
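
To make the attack temporal centroid concrete, the following minimal Python sketch computes it as the amplitude-weighted mean time of the attack portion of an envelope, following the "center of gravity" definition given in this abstract. It is an illustration under stated assumptions, not the authors' analysis code: the envelope is assumed to be already extracted and trimmed to the attack portion, and the power-law ramps generated by the hypothetical helper `ramp` are only one way to vary envelope shape at a fixed attack time.

```python
def attack_temporal_centroid(envelope, sample_rate):
    """Amplitude-weighted mean time (seconds) of an attack-portion envelope.

    Assumption: `envelope` holds non-negative amplitude samples covering
    only the attack portion of the signal.
    """
    total = sum(envelope)
    if total == 0:
        raise ValueError("envelope has no energy")
    weighted = sum((i / sample_rate) * a for i, a in enumerate(envelope))
    return weighted / total


def ramp(attack_s, sample_rate, exponent):
    """Hypothetical attack envelope rising from near 0 to 1 as a power law.

    exponent = 1 gives a linear ramp; exponent > 1 rises late,
    exponent < 1 rises early.
    """
    n = int(round(attack_s * sample_rate))
    return [((i + 1) / n) ** exponent for i in range(n)]


if __name__ == "__main__":
    sr = 1000  # samples per second (illustrative value)
    for exponent in (0.5, 1.0, 3.0):
        centroid = attack_temporal_centroid(ramp(0.1, sr, exponent), sr)
        print(f"exponent={exponent}: centroid={centroid * 1000:.1f} ms")
```

All three envelopes share the same 100 ms attack time, yet their centroids differ because the amplitude is distributed differently within the attack. This is the kind of variation the stimulus sets in the abstract manipulate while holding attack time fixed.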

Orchestration is a musical practice that involves combining the acoustic properties of several instruments. This combination creates perceptual effects that are prominent in orchestral music, such as blend, segregation, and orchestral contrasts, to name but three. Here, we present our approach for modelling the perception of orchestral effects that result from three auditory grouping processes, namely concurrent, sequential, and segmental grouping. Moreover, we discuss how findings from research on auditory scene analysis and music perception experiments informed the formulation of rules for the development of computer models capable of detecting and identifying such perceptual effects from only the symbolic information of the musical score. Based on the modelling of orchestral blend as a case study, we detail the benefits and performance of applying such rules to the processing of musical information. The models obtained an average accuracy score of 81%, established by comparing the computer output of our models on several orchestral excerpts to annotations defined by musical experts. However, we also explain the limitations of using rules based solely on symbolic information. Combining instrumental characteristics inherently involves manipulating timbral properties that are essentially not represented in the symbolic information of scores, highlighting the need to perform and incorporate data resulting from audio analyses in order to completely grasp the parameters responsible for the perception of orchestral effects.

We have a very vivid experience of music heard for the first time. However, a growing body of convergent research shows that our memory for it varies within the first minute after our experience, and that the memories of nonmusicians, moderately trained musicians, and professional musicians differ. All three groups hear familiar melodies in terms of scale-step values. However, only listeners with musical training automatically hear the pitches of unfamiliar melodies as scale steps, and that encoding takes on the order of 10 s after first hearing. When tested 4-5 s after hearing a melody followed by continuing music, both nonmusicians and moderate musicians confuse targets with lures in which the melody has been shifted to a different place on the scale (with changes in pitch-to-pitch intervals). If that test is delayed another 10 s, both groups reject the same-contour lures better, and that performance is especially good with the moderate musicians. This result converges with the failure of both groups to notice out-of-key wrong notes in unfamiliar melodies; even if they were encoding scale-step values, that process is too slow to provide a timely response to an out-of-key note. Furthermore, noticing a tonal modulation supposes the tracking of the relative strength of scale-step values from moment to moment. When listeners track modulations in both Western and South Indian melodies using the continuous-probe-tone paradigm, nonmusicians' tonal profiles generally fail to show the effects of the modulations. Moderate musicians show them, and professionals show them even more strongly. But even for professionals, the shift in the tonal profiles continues to grow even after the first 15 s of the modulation. Taken together, this evidence suggests that even professionals will remember hearing a piece with firmly grounded tonal structure, even though their initial immediate experience of the piece lacked that structure.

The past decade of research has provided compelling evidence that musicality is a fundamental human trait, and its biological basis is increasingly scrutinized. In this endeavor, the detailed study of individuals who have musical deficiencies (congenital amusia) or exceptional talents (musical prodigies) is instructive because of likely neurogenetic underpinnings. I will review key points that have emerged during recent years regarding the neurobiological foundations of these extraordinary variations of musicality.

Despite ubiquitous background noise, most people perceive speech successfully. This may be explained in part by a combination of supporting mechanisms. For example, visual speech cues (e.g., lip movements) and sentence context typically improve speech perception in noise. While the beneficial nature of these cues is well documented in native listeners, comparatively little is known for non-native listeners, who may have less developed linguistic knowledge in their second language and are typically more impaired by background noise. Furthermore, older bilinguals may be at a disadvantage, as they also have to manage sensory changes such as presbycusis and/or changes in visual acuity. We are investigating the extent to which French-English/English-French bilinguals in Montreal benefit from visual speech cues and sentence context in their first (L1) and second language (L2). Participants were divided into three groups: young adults (18-35 years), older adults (65+) with normal hearing, and older adults with hearing loss. All participants were presented with audio-video recorded sentences in noise (twelve-talker babble); they had to repeat the terminal word of each sentence. Half of the sentences offered moderate levels of contextual information (e.g., "In the mail, he received a letter."; MC), while the other half offered little context (e.g., "She thought about the letter."; LC). Furthermore, the sentences were presented in three modalities: visual, auditory, and audiovisual. Participants were more accurate in L1 compared to L2, and for MC sentences compared to LC sentences. However, all groups benefited from visual speech cues and sentence context to similar extents. This benefit, however, was differentially moderated by visual working memory performance in young adults compared to older adults with normal hearing, suggesting that working memory capacity plays a role in the extent to which bilinguals benefit from the combination of visual speech cues and sentence context and that this relationship changes across the lifespan.

In both hand movements and articulation, intended acts can affect the way current acts are executed. Thus, the articulation of a given speech sound is often contingent on the intention to produce subsequent sounds (co-articulation). Here we show that the intention to subsequently repeat a short sentence, overtly or covertly, significantly modulated the articulatory musculature already while listening to or reading the sentence (i.e., during the input phase). Young adults were instructed to read sentences (presented as whole sentences or word-by-word) or listen to recordings of sentences, which were to be repeated afterward. Surface electromyography (sEMG) recordings showed significant reductions in articulatory muscle activity, above the orbicularis oris and the sternohyoid muscles, compared to baseline, during the input phase. These temporary reductions in EMG activity were contingent on the intention to subsequently repeat the input overtly or covertly, but also on the input modality. Thus, there were different patterns of activity modulations before the cue to respond, depending on the input modality and the intended response. Only when repetition was to be overt did a significant build-up of activity occur after sentence presentation, before the cue to respond; this build-up was most pronounced when the sentence to be repeated was heard. Neurolinguistic models suggest that language perception and articulation interact; the current results suggest that the interaction begins already during the input phase, whether listening or reading, and reflects the intended responses.

Prosody is the way something is spoken. Adults and children regularly use prosody in language comprehension and production, but much research focuses on emotion or on syntactic interpretation. The current study focuses on comprehension of intentional prosody devoid of semantic information. Adults (n = 72) and children (n = 72) were asked to identify the referent of an isolated label (familiar or nonsense words) based on the varying prosodic information alone. Labels were spoken with intentional prosody (warn, doubt, name). Adults and children selected the intended referent at above-chance levels of performance for both word types and all intentions, with adults performing faster and more accurately than children. Overall, this research demonstrates that children and adults can successfully determine the intention behind words and nonsense words, even when they are presented in isolation. This adds support to the idea that prosody alone can convey meaning.

Two experiments explore how emotion physiology can affect language iconicity. We examined how patterns of facial muscle activity (FMA) may lead to associating affect with specific phonemic utterances. We measured the increase in perception of positive affect for the /i:/ vowel sound (as in "gleam") and negative affect for the /ʌ/ sound (as in "glum") for non-words (NWs). English single-syllable NWs containing /i:/ or /ʌ/ were rated for valence as -5, -3, -1, +1, +3, or +5. We found /i:/ NWs were rated significantly above 0, and /ʌ/ NWs significantly below 0, confirming a bidirectional emotional association. We then tested whether verbal articulation of NWs containing these vowel sounds with confirmed emotional valence ratings corresponded with consistent affective FMA. We recorded FMA using EMG while participants read NWs. Regression analysis revealed a relation between valence rating of the NWs and FMA. Our confirmation of a relationship between emotion and speech physiology can explain a type of sound symbolism. FMA associated with particular emotions appears to favor production of specific phonemic sounds. The findings support the view that human physiology associated with emotions likely affects word meanings in a non-arbitrary manner.

Links between music and emotion in dramatic art works (film, opera or other theatrical forms) have long been recognized as integral to a work's success. A key question concerns how the auditory and visual components in dramatic works correspond and interact in the elicitation of affect. We aim to identify and isolate the components of dramatic music that are able to clearly represent basic emotional categories using empirical methods to isolate and measure auditory and visual interactions. By separating visual and audio components, we are able to control for, coordinate, and compare their individual contributions. With stimuli from opera and film noir, we collected a rich set of data (N=120) using custom software that was developed to enable participants to successively record real-time emotional intensity, to create event segmentations and to apply overlapping, multi-level affect descriptor labels to stimuli in audio-only, visual-only and combined audiovisual conditions. Our findings suggest that intensity profiles across conditions are similar, but that the auditory-only component is rated stronger than the visual-only or audiovisual components. Descriptor data show congruency in responses based on six basic emotion categories and suggest that the audio-only component elicits a wider array of affective responses, whereas the visual-only and audiovisual conditions elicit more consolidated responses. These data will enable a new type of musical analysis based entirely on emotional intensity and emotion category, with applications in music perception, music theory, composition, and musicology.

Multisensory integration allows us to link sensory cues from multiple sources and plays a crucial role in speech development. However, it is not clear whether humans have an innate ability or whether repeated sensory input while the brain is maturing leads to efficient integration of sensory information in speech. We investigated the integration of auditory and somatosensory information in speech processing in a bimodal perceptual task in 15 young adults (age 19 to 30) and 14 children (age 5 to 6). The participants were asked to identify whether the perceived target was the sound /e/ or /ø/. Half of the stimuli were presented under a unimodal condition with only auditory input. The other stimuli were presented under a bimodal condition with both auditory input and somatosensory input consisting of facial skin stretches provided by a robotic device, which mimics the articulation of the vowel /e/. The results indicate that the effect of somatosensory information on sound categorization was larger in adults than in children. This suggests that integration of auditory and somatosensory information evolves throughout the course of development.

Temporal accounts of Developmental Dyslexia (DD) postulate that a general predictive timing impairment plays a critical role in this disorder. However, DD is characterized by timing disorders as well as cognitive and motor dysfunctions. It is still unclear whether non-verbal timing and rhythmic skills per se might be good predictors of DD. This study investigated the independent contribution of timing to DD alongside the motor and cognitive functions typically impaired in DD. We submitted children with DD (aged 8-12) and controls to perceptual timing, finger tapping, fine motor control, as well as attention and executive tasks. Children with DD performed more poorly than controls in most of these tasks. Predictors of DD, as found with logistic regression modeling, were beat perception and precision in tapping to the beat, which are both predictive timing variables, children's tapping rate, and mental flexibility. These data support temporal accounts of DD in which predictive timing impairments related to dysfunctional brain oscillatory mechanisms partially explain the core phonological deficit, independently from general motor and cognitive functioning. In addition, we provide strong evidence that deficits in predictive timing in DD are not a by-product of other timing deficits (i.e., perception of durations) or of global dysfunction in DD.

There is considerable interest in using music interventions (MIs) to address core impairments present in children and adolescents with autism spectrum disorder (ASD). An increasing number of studies suggest that MIs have positive outcomes in this population, but no systematic review employing meta-analysis to date has investigated the efficacy of MIs across three of the predominant symptoms in ASD, specifically social functioning, maladaptive behaviors and language impairments. A systematic evaluation was performed across 17 peer-reviewed studies comparing MIs with non-music interventions (NMIs) in children with ASD. Quality assessment was also undertaken based on the CONSORT statement. Eleven studies fulfilled inclusion criteria for meta-analysis, and the results of these quantitative analyses supported the effectiveness of MIs in ASD, particularly for measures sensitive to social maladaptive behaviors. Comparisons further suggested benefits of MIs over NMIs for social outcomes, but not for non-social maladaptive or language outcomes. Methodological issues were common in studies, such as small sample sizes, restricted durations and intensities of interventions, missing sample information and matching criteria, and attrition bias. Together, the combined systematic review and meta-analyses present an up-to-date evaluation of the evidence for the benefits of MIs in children with ASD. Key recommendations are provided for future clinical interventions and research on MIs in ASD.

We report a study that applied survival analysis to examine theory-based predictions relating to the impact of auditory distraction on the time-course of insight versus analytic solutions in problem solving with both verbal and visuo-spatial tasks. The study involved a within-participants design that manipulated task type (problems typically solved via insight vs. ones typically solved via analysis), solution modality (verbal vs. visuo-spatial) and auditory distraction (to-be-ignored background speech vs. quiet). When participants offered a solution they also self-reported their solution strategy (i.e., whether it was more insight-based or analysis-based). We also took measures of working memory capacity as exploratory variables: verbal (operation span) and spatial (symmetry span). Our survival analysis shows how the time-course of solution generation via insight versus analysis is impacted by auditory distraction in ways that are predicted by problem-solving theories that recognise the interplay between: (i) implicit restructuring processes; and (ii) explicit executive processes.

Recent research has focused on measuring neural correlates of metacognitive judgments in decision and post-decision processes during memory retrieval and categorization. However, many tasks may require monitoring of earlier sensory/perceptual processes. In the ongoing research described here, we are examining the neural correlates of confidence, learning, and metacognition in simple auditory tasks. In Study 1, participants indicated which of two intervals contained an 80-ms pure tone embedded in white noise. Tone-locked event-related potentials (ERPs) were used to investigate the processing stages related to confidence. N1, P2, and P3 amplitudes were larger for high- compared to low-confidence trials, indicating that processing at relatively early (N1) and late (P3) stages is associated with confidence judgments. Study 2 examined whether improvements in auditory detection and plasticity in the ERP, as a result of perceptual learning, were associated with changes in confidence ratings. As in Study 1, participants were trained to detect either an 861-Hz or 1058-Hz tone in noise. EEG was recorded during the presentation of trained and untrained frequency tones during active detection and passive exposure to sounds. During the active detection portion, accuracy, confidence ratings, P2, and P3 amplitudes were higher for trained compared to untrained tones. Further, the P2 amplitude effect remained, even under passive exposure to trained and untrained tones. Study 3 examines the relationship between metacognitive accuracy (meta-d') and the ERP features found to be associated with confidence in Studies 1 and 2. Current data support the trend that meta-d' tracks individual differences in ERPs. Participants with better metacognitive accuracy show larger overall amplitude differences between high- and low-confidence trials. We suggest that metacognitive judgments can track both sensory- and decision-related processes as well as perceptual learning. Additionally, differences in how the brain processes auditory signals may predict one's ability to monitor one's own performance through confidence judgments.

Pre-stimulus brain states modulate performance in perceptual tasks. Evidence largely comes from electroencephalogram (EEG) recordings showing that performance is correlated with EEG phase prior to stimulus onset. Here, we explore whether: 1) spontaneous oscillations predict performance, 2) learning is associated with adjustments of pre-stimulus phase, and 3) temporal dynamics of stimuli determine the frequencies at which effects are seen. In Experiment 1, participants heard multiple 40-ms sinusoidal tones separated by short silent intervals. The task was to indicate the tone frequency pattern (e.g., low-low-high). Unlike prior work, no background sounds were employed to entrain or induce EEG oscillations. Pre-stimulus 7-10 Hz phase was found to differ between correct and incorrect trials ~200 to 100 ms prior to tone-pattern onset. Further, after sorting trials into bins based on phase, accuracy showed a clear cyclical trend. In Experiment 2, the same task was used except that a brief noise burst served as a warning signal 3 s before stimulus onset. Tone pattern identification improved over 2 days of training. Though there were increases in post-stimulus phase consistencies, and a replication of pre-stimulus effects from Experiment 1, there was no clear indication that learning was associated with increased pre-stimulus phase consistency. In Experiment 3, the rate at which tones were presented in the stimulus was manipulated. Though performance was once again correlated with pre-stimulus phase, the temporal dynamics of the stimulus had no clear impact on the frequency at which these effects were observed. These data add to a growing body of evidence that oscillatory brain states impact auditory perception. Further, the work shows that these states do not need to be induced, and may be robust to changes in the temporal dynamics of stimuli and tasks.
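
The phase-binning step mentioned in the abstract above (sorting trials into bins by pre-stimulus phase and comparing accuracy across bins) can be sketched as follows. This is an illustrative sketch only, not the authors' pipeline: the function name, the default of eight bins, and the assumption that each trial already has a single phase estimate in radians are all hypothetical.

```python
import math


def accuracy_by_phase_bin(phases, correct, n_bins=8):
    """Proportion of correct trials within each equal-width phase bin.

    phases  : per-trial phase estimates in radians (any range; wrapped here)
    correct : per-trial booleans (True for a correct response)
    Returns a list of length n_bins; a bin with no trials yields None.
    """
    hits = [0] * n_bins
    counts = [0] * n_bins
    width = 2 * math.pi / n_bins
    for phase, ok in zip(phases, correct):
        wrapped = phase % (2 * math.pi)  # map to [0, 2*pi)
        # min() guards against floating-point results equal to 2*pi
        index = min(int(wrapped / width), n_bins - 1)
        counts[index] += 1
        hits[index] += int(ok)
    return [h / c if c else None for h, c in zip(hits, counts)]


if __name__ == "__main__":
    # Toy data invented for illustration; not taken from the study.
    toy_phases = [0.1, 0.2, math.pi + 0.1, -0.1]
    toy_correct = [True, False, True, True]
    print(accuracy_by_phase_bin(toy_phases, toy_correct, n_bins=2))
```

A cyclical trend of the kind reported would appear as accuracy rising and falling smoothly across adjacent bins rather than varying at random.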

One of the major hallmarks of James J. Gibson's (1979) ecological approach to perception is the notion that the environment structures the energy within it. The energy arriving at any given location in space is defined by the objects in the environment and the location of the energy source. As one would imagine, each location within a setting is unique and a change in location will result in a change in the energy available at that location. Thus, the light contacting the eye is dependent on the arrangement of objects, the position of an observer, and the energy source. In brief, perception is based, in part, on the point of observation. Since then, a number of studies have found that visual judgments are significantly influenced by the particular height at which we see the world. Manipulations of eye height have significantly affected observer judgments of gap size, surface height, and distance, for example. If where we see the world from (i.e., eye height) affects visual judgments of the world, then it can be expected that where we hear from (i.e., ear height) influences auditory judgments. The present study investigated the impact of "observer" location on the ability to accurately judge the direction of a moving sound source. More specifically, participants were exposed to audio recordings of a pedestrian who was walking either up or down a set of stairs. The recordings were made from either ear, waist, or ankle height. In a second experiment, comparisons were made between egocentric and allocentric points of observation. The participant's task was simply to report the pedestrian's direction of motion. The findings are discussed in terms of the importance of the point of observation in affecting auditory perception of movement direction, in particular, and auditory spatial perception, in general.

The interaction of two objects or materials has the capacity to produce sound. The result of the interaction not only creates awareness that an event has transpired, it also has the capacity to inform observers about the form or particulars of the event. It has been shown that observers are able to use the resulting acoustic event to make categorical distinctions (e.g., bouncing vs. breaking, sex of pedestrian, shape of a struck object) and metrical judgments (e.g., hardness of the striking object, length of a dropped object, the area of the struck object) of the object involved. With respect to vision, tau is believed to be informative about time-to-contact and time-to-passby (i.e., the time at which an object will reach the point of observation). In terms of audition, there has been considerable debate as to the extent to which tau is informative. Controversy aside, tau still remains a potential avenue of research in terms of its derivative (henceforth referred to as tau-dot). Tau-dot provides the opportunity for perceivers to judge the severity of collision (hard or soft) between two objects. Participants in Experiment 1 were exposed to 8 distinct events, with each event differing in the force used to create that event. Participants judged (on a scale of 0 to 10) the perceived severity of the contact. Experiment 2 was identical, but with half the participants being provided a very brief description of the event. The results revealed that events deemed hard and soft were essentially perceived as such. It was also determined that the different events were not judged equivalently. Interestingly, participant awareness of the object involved in the event significantly affected perception of contact intensity. We will discuss the development of a coherent theory of event perception as well as future avenues for research.

When participants are instructed to predict the time-to-contact of a looming object (an object approaching in depth), past literature has supported the existence of an auditory looming bias (e.g., Neuhoff, 1998; Rosenblum et al., 1987; Schiff & Oldak, 1990). A looming bias occurs when participants report the object as having arrived at the point of observation, when in reality, it is still some distance away. It has been theorized that humans perceive looming objects as closer than they truly are to facilitate survival by allowing time for evasive action to be taken (e.g., Haselton & Nettle, 2006; Neuhoff, 2001). Studies supporting the existence of such phenomena suggest a significant amount of slippage between an observer's perception of a moving object's position and the position of the object in reality. However, in the vast majority of these studies, participants were exposed to recorded or virtually simulated stimuli, through the use of screens and speakers. They were also instructed to use a non-ecological (i.e., non-action-based) response method to indicate the position of the object (e.g., pressing a key on a keyboard). To address the potential for methodological error, the aim of the present study was to measure the effects of auditory looming bias on action-based participant responses to a live event. Using only auditory information, participants were instructed to perform either an action-based response (interception or evasion) or a non-action-based response (pressing a key or verbal response). The event participants responded to was a ball rolling down a track. Participants were seated such that the ball rolled toward or past them. Accuracy of response was measured at time-to-contact and time-to-pass-by as well as between the four response methods. The findings will be discussed in terms of the difference between action and perception as well as the impact of the point of observation.

A number of studies involving auditory motion perception have been conducted, but they are limited to one-dimensional changes in object position. However, in natural settings, sound-producing sources vary in distance, azimuth, and altitude. Individuals perceive approaching sound sources as becoming louder and receding sound sources as becoming quieter. Only one known study to date has focused on motion perception of object position in two dimensions. Johnston and Russell (2017) discovered that participants were approximately 95% accurate at judging motion in the horizontal dimension versus only 65% accurate at judging motion in the vertical dimension. The researchers did not examine the impact that the location of the recording device had on perceptual judgments. The sound created by the pedestrian's feet contacting the ground (stairs) will be altered by the relative positions of the pedestrian and sound recording device. It is expected that variations in the position of the recording device will translate into different aspects of the acoustic energy array being detected. In the present study, participants were exposed to six sounds of three variations of audio recording device location (bottom, middle, and top of a staircase). The task of the participant was to identify whether the pedestrian approached or receded, and whether the pedestrian ascended or descended the staircase. The results suggest that recording at different locations capitalizes on our ability to make use of acoustic structure, such as reverberation, which is informative about the direction of a moving sound source.

The occurrence of a sound that deviates from the recent auditory past tends to cause an involuntary diversion of attention. Such attentional capture, which originates from an acoustic-irregularity sentinel detection system, generally leads to performance impairment on the ongoing cognitive task. Recent studies showed that this attentional response can be indexed by a rapid pupillary dilation response (PDR). However, the PDR has been observed exclusively following the presentation of a (deviant) sound that induced an acoustical change. This raises the question as to whether the PDR is a product of the novelty of the capturing event or its violation of learned expectancies based on any invariance characterizing the auditory background. The present study aimed to determine whether the PDR to a deviant sound could be elicited in the absence of acoustic novelty. To do so, subjects completed a visual serial recall task while ignoring an irrelevant auditory sequence. Standard sequences consisted of two alternating spoken letters (e.g., A B A B A B). A deviation was induced by either inserting a new letter (deviant change; A B A B X B) or by repeating one of the two letters (deviant repetition; A B A B B A). Recall performance was poorer in the presence of any type of deviant. More importantly, both letter change and letter repetition elicited a significant PDR. By demonstrating that a pupillary response was triggered in the absence of acoustic novelty, this study suggests that the PDR truly indexes attentional capture and that it is underpinned by higher-order, expectancy-violation detection cortical processes.

Working memory (WM) involves the storage and manipulation of information. A common task used to assess WM capacity is the n-back, which places a greater load on WM as the value of n increases. It is well-known that dorsolateral prefrontal cortex (DLPFC) activation increases as n increases, but only three studies have compared brain activation during the n-back as a function of stimulus modality (i.e., auditory or visual). These studies varied with regard to neuroimaging methods and load conditions employed. The earliest study, a positron emission tomography (PET) study of the 3-back, found no effect of stimulus modality on brain activation. In contrast, two subsequent functional magnetic resonance imaging (fMRI) studies of the 2-back found that DLPFC activation was greater during the auditory condition. The fMRI findings were interpreted as evidence that auditory WM places greater demand on the central executive than visual WM. In the current study, our aim was to assess two explanations for these discrepant findings: (1) whether the effect of stimulus modality on DLPFC activation is WM load-dependent, and (2) whether the effect of stimulus modality on DLPFC activation may have been driven by fMRI scanner noise. To do this, 16 younger adults completed an n-back with visual stimuli and one with auditory stimuli, both at four levels of WM load: 0-back (control), 1-back (easy), 2-back (medium), and 3-back (hard). Concurrently, activation of the DLPFC was measured using functional near-infrared spectroscopy, a quiet neuroimaging method. We found that DLPFC activation increased with WM load, but was not affected by stimulus modality at any WM load. This supports the earlier view obtained in the PET study that WM is modality-independent, and suggests that the two fMRI findings of greater DLPFC activation in the auditory n-back may have been caused by scanner noise making this condition more difficult.
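
For readers unfamiliar with the n-back task named in the abstract above, the following minimal sketch shows how target trials are defined for n of 1 or more: a trial is a target when its stimulus matches the one presented n trials earlier. The function name and the letter sequence are invented for illustration, and the 0-back control condition (typically a match to a fixed, pre-specified item) is deliberately not covered.

```python
def n_back_targets(stimuli, n):
    """Mark each trial as a target (True) if it matches the item n trials back.

    The first n trials can never be targets because nothing precedes them
    at the required lag.
    """
    if n < 1:
        raise ValueError("this sketch covers n >= 1 only")
    return [i >= n and stimuli[i] == stimuli[i - n] for i in range(len(stimuli))]


if __name__ == "__main__":
    sequence = list("ABABCAC")  # hypothetical letter stream
    for n in (1, 2, 3):
        print(n, n_back_targets(sequence, n))
```

Higher values of n require holding more items in mind and updating them on every trial, which is why load increases with n.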

Synesthesia is a phenomenon in which particular sensory inputs elicit atypical perceptions in addition to the standard perception. Several studies have shown that cognitive processes are essential for synesthesia. In grapheme-color synesthesia, an ambiguous graphemic stimulus can induce different synesthetic colors when it is interpreted as a digit or a letter (Myles et al., 2003; Dixon et al., 2006). We investigated the influence of ambiguous pitch class information on sound-color synesthesia. Participants who were sound-color synesthetes (N = 12) listened to narrowband noises with a different pitch and a bandwidth of 400 cents that included five continuous pitch classes such that the pitch-class information was ambiguous (Fujisaki & Kashino, 2005). One month later, the participants engaged in the same task under two conditions: (1) the pitch class name was shown; and (2) the pitch class name was absent (identical to the first day). Presented pitch class names were within the bandwidth of the noise (specifically, the pitch class below or above the central one). We examined whether physically identical sounds elicited different synesthetic colors by changing the participants' interpretations of the pitch class. The result differed among synesthetes based on their absolute pitch ability. Relative to the session one month earlier, the synesthetic colors differed significantly more in the pitch-class-presented condition than in the pitch-class-absent condition for synesthetes who performed well in the pitch-class naming task, whereas synesthetic colors were not significantly different among conditions in the other synesthetes. It is concluded that the influence of cognitive pitch-class interpretation could be observed even in sound-color synesthesia. However, this finding might be valid only for synesthetes with absolute pitch ability, who might usually associate synesthetic colors with pitch class information.

Developmental synesthesia is a neurological condition in which certain perceptions or cognitions trigger supplementary perceptions (e.g., sounds evoke specific colors). Like absolute pitch, tone-color synesthesia is associated with enhanced music processing and increased connectivity in auditory cortex. The two conditions also show phenotypic and genotypic overlap as well as early childhood acquisition. Here, we report the case of NTM, a 27-year-old violinist with absolute pitch and automatic synesthetic associations between musical notes and specific colors, both acquired in early childhood. Her synesthesia was validated by a standardized synesthesia battery. At age 23, NTM switched from classical (A440 tuning standard) to baroque (A415 tuning standard) music learning and experienced a serious incongruence in her synesthesia because of the semitone difference. Absolute pitch often interferes with adaptation to a new tuning standard, but for NTM, her synesthetic color associations made the interference so intense that it prevented her from playing for a whole semester. Using voluntary training (coloring the scores with sound-congruent colors), NTM succeeded in learning new synesthetic color associations to notes in order to play baroque music. She also retained the initial set of color associations to notes in the A440 standard and could voluntarily switch from one set of associations to the other. The new synesthetic training also changed her letter-color synesthetic associations. This rare case underscores the plasticity of associations in tone-color synesthesia even in adults.

This study investigated correlations in audio-visual perception. The goal was to find common tendencies in the correlation between auditory perception and visual associations. Materials and Methods: A test was developed for this purpose, based on the cognitive features of auditory and visual perception, and administered to 110 respondents. Along with other audio-visual associations, we examined the influence of the timbre of musical instruments and ensembles on the occurrence of audio-visual associative correlations during music perception. Results and Discussion: The following general conclusions can be drawn about the influence of instrument timbre on color associations: Musical instruments with a rich timbre are associated with dark colors. Musical instruments with a clear timbre are associated with cool and light colors. Musical instruments with a distinct sound are associated with colors of high lightness. The following tendencies were observed for the influence of musical ensembles on color associations: Clearer, more powerful, and more intense sounds in a musical composition are associated with clearer and more intense colors. The complicated sounds of jazz orchestras, choirs, and electronic music are associated with complex and secondary colors. Saturated sounds are associated with colors of low lightness. The data on these relationships will be presented in tabular form in the poster. The results suggest that cognitive perception plays a determining role in associative audio-visual relationships, and that these relationships can be explained through the recognition of patterns at the cognitive level.

Tapping to the beat of a rhythmic stimulus, either music or a metronome, is a well-known task to measure rhythmic skills. Several musical synchronization tasks have been developed, for instance as part of recent batteries of rhythmic tests, such as the Battery for the Assessment of Auditory Sensorimotor and Timing Abilities (BAASTA) and the Montreal-Beat Alignment Test (M-BAT). In these batteries, synchronization to the beat is tested with different kinds of musical stimuli: BAASTA uses computer-generated classical music with a fixed tempo (100 bpm), while M-BAT contains more ecological music stimuli (i.e., real performances) that vary in terms of tempo (82-170 bpm) and genre (Merengue, Rock, Jazz, etc.). These two batteries have never received external validation, which was the aim of the present study. Forty-five participants performed the synchronization tasks from the two batteries and an unpaced tapping task (from BAASTA) to assess their spontaneous tempo. We calculated participants' synchronization consistency (i.e., how variable participants were in aligning their taps to the beat) for both synchronization tasks, and their spontaneous tempo (mean inter-tap interval; ITI) with the unpaced tapping task. Moreover, we computed motor variability, namely the coefficient of variation of the mean inter-tap interval (CV ITI). The results show a strong correlation between the synchronization consistency of the two batteries, despite the difference in complexity of the stimuli. This correlation is also independent of motor variability and spontaneous tempo, and there is no correlation between the participants' motor variability for the two batteries. This indicates that both tasks assess audio-motor coupling with music, and not general motor variability. This study provides support for the external validity of both batteries to assess synchronization to music.
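
The two unpaced-tapping measures named in the abstract above (mean ITI and CV ITI) can be illustrated with a minimal Python sketch. The function names, the millisecond tap times, and the use of the sample standard deviation are assumptions made for illustration; the abstract does not specify how the authors computed these values.

```python
from statistics import mean, stdev


def inter_tap_intervals(tap_times_ms):
    """Return the intervals between successive taps (hypothetical helper)."""
    return [later - earlier for earlier, later in zip(tap_times_ms, tap_times_ms[1:])]


def spontaneous_tempo_and_cv(tap_times_ms):
    """Return (mean ITI, CV ITI) for one unpaced tapping trial.

    CV ITI is taken here as the standard deviation of the ITIs divided by
    their mean. Using the sample standard deviation is an assumption.
    """
    itis = inter_tap_intervals(tap_times_ms)
    mean_iti = mean(itis)
    cv_iti = stdev(itis) / mean_iti
    return mean_iti, cv_iti


# Made-up tap onsets in milliseconds, for illustration only.
taps = [0, 600, 1190, 1800, 2400]
mean_iti, cv_iti = spontaneous_tempo_and_cv(taps)
print(f"mean ITI = {mean_iti:.1f} ms, CV ITI = {cv_iti:.4f}")
```

A lower CV ITI indicates steadier tapping, which is why it can serve as an index of motor variability that is separate from synchronization to a beat.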

Perceptual and sensorimotor timing skills can be fully assessed with the Battery for the Assessment of Auditory Sensorimotor and Timing Abilities (BAASTA). The battery is a reliable tool for evaluating timing and rhythm skills, with high sensitivity to individual differences. We present a recent implementation of BAASTA as an app on a tablet device. Using a mobile device ensures portability of the battery while maintaining excellent temporal accuracy in recording the performance in perceptual and motor tests. BAASTA includes nine tasks (four perceptual and five motor). Perceptual tasks are duration discrimination, anisochrony detection (with tones and music), and a version of the Beat Alignment Test. Production tasks involve unpaced tapping, paced tapping (with tones and music), synchronization continuation, and adaptive tapping. Normative data obtained with the tablet version of BAASTA in a group of healthy non-musicians are presented, and profiles of perceptual and sensorimotor timing skills are detected using machine-learning techniques. The relation between the identified timing and rhythm profiles in non-musicians and general cognitive functions (working memory, executive functions) is discussed. These results pave the way to establishing thresholds for identifying timing and rhythm capacities in the general and affected populations.

Traumatic brain injury (TBI) impacts millions of individuals each year. Following a TBI, individuals may experience physiological symptoms, such as oculomotor dysfunction, and cognitive symptoms, such as deficits in memory, attention, and higher order cognitive abilities. There is limited research on the impact of auditory distraction on functional task performance (e.g., reading) following a TBI. This study examined the effect of auditory distraction on memory and reading performance in adults with and without mild traumatic brain injury (mTBI). Twenty-six healthy controls and 23 adults with a history of mTBI completed a short-term memory task, a working memory task, and an academic reading comprehension task. All tasks were administered with and without auditory distraction. Participants' eye movements were tracked during the academic reading comprehension task. Compared with healthy controls, individuals with mTBI recalled fewer items on the short-term memory task in the presence of auditory distraction, but not on the working memory task. On the academic reading comprehension task, individuals with mTBI performed worse than healthy controls in the presence of auditory distraction, but only on specific types of content. Eye movement patterns corroborated the behavioral data and revealed that individuals with mTBI experienced greater difficulty than healthy controls in the presence of auditory distraction on specific types of content. These findings indicate that experiencing an mTBI may have lasting effects on cognitive abilities, particularly abilities that are recruited for functional tasks, such as academic reading.

Head trauma can lead to problems with the ear and auditory pathway. These problems can involve tympanic membrane perforation, fragments in squamous epithelium, damage to the ossicles, or ischemia of the cochlear nerve. It is common for behavioral checklists for concussion or head injuries to include an item about hearing difficulty. In the present study, 152 introductory psychology students completed a survey in which they indicated if they had ever had a concussion or sustained a head injury. Approximately one-third (35.53%) of the sample had a history of head trauma. The Hearing Screening Inventory was also part of the survey. Overall, participants who had a previous head injury reported more hearing difficulties than participants with no previous head injury (t(150) = 2.15, p < .02). Although this difference had a moderate effect size (d = .37), it suggests that hearing difficulties may linger, since participation was not limited to those having a recent head injury but was open to anyone who had a head injury at any point in time. An examination of specific hearing difficulties revealed that the difference between the two groups was based almost exclusively on their ability to distinguish target sounds from background noises. Specifically, the ability to understand words in music (t(150) = 2.36, p < .01; d = .40) and to isolate an individual speaking from background conversations (t(150) = 2.44, p < .01; d = .41) differentiated the two groups. This finding is consistent with Hoover, Souza, and Gallun (2017), who also found that head injury can impair target and noise processing.
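
As a rough illustration of how the effect sizes in the abstract above relate to the reported t statistics, the Python sketch below converts an independent-samples t value into Cohen's d. Several things here are assumptions rather than details from the abstract: that the tests were independent-samples t-tests, that the group sizes were 54 and 98 (inferred from 35.53% of 152), and the function name itself. Because the published t values are rounded, the results only approximate the reported d values.

```python
from math import sqrt


def cohens_d_from_t(t_value, n1, n2):
    """Approximate Cohen's d from an independent-samples t statistic.

    Uses d = t * sqrt(1/n1 + 1/n2), which assumes a pooled-variance t-test.
    """
    return t_value * sqrt(1 / n1 + 1 / n2)


# Group sizes inferred from the abstract (assumption): 35.53% of 152 is about 54.
n_injury = round(0.3553 * 152)
n_no_injury = 152 - n_injury

for label, t_value in [("overall", 2.15), ("words in music", 2.36), ("background talk", 2.44)]:
    d = cohens_d_from_t(t_value, n_injury, n_no_injury)
    print(f"{label}: t = {t_value}, d is roughly {d:.2f}")
```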

The majority of people can easily track the beat of simple and complex auditory sequences (e.g., a metronome or music) and move along with it. There is growing evidence that these rhythmic abilities are impaired in children with neurodevelopmental disorders, such as developmental dyslexia or ADHD. Rhythm impairments are shown with a variety of perceptual and sensorimotor measures. However, due to the heterogeneity across measures, it is unclear whether rhythmic difficulties are per se a hallmark of a particular disorder or rather the result of a common cognitive deficit in memory, attention, or executive functions. In this study, to test the possibility that profiles of rhythmic abilities might characterize neurodevelopmental disorders, we analyzed a large sample of children with neurodevelopmental disorders who underwent the same rhythmic tests. Children (n = 50, with ADHD or dyslexia; n = 40 controls) were tested with the Battery for the Assessment of Auditory Sensorimotor and Timing Abilities (BAASTA), a battery assessing perceptual and sensorimotor timing abilities. We found that rhythm perception does not discriminate children with ADHD from children with dyslexia. In contrast, children with ADHD display higher motor variability than children with dyslexia and controls, as well as higher variability in synchronization, especially when they have comorbid developmental coordination disorder (DCD). These differences persist when controlling for children's general cognitive functioning. These results will pave the way to new methods for identifying different rhythmic profiles in children with neurodevelopmental disorders and for developing individualized rhythm-based interventions.